Introduction

I’ve been incredibly excited about the premium version of Claude 3 since its release on March 4, 2024, and for good reason. Ever since my previous favorite chatty chatbot, ChatGPT-4, went off the rails, I had been missing a competent chatbot… I signed up the second I heard about it on March 4th, and it has been a pleasure to use Claude 3 ever since. It actually understands my prompts and usually provides me with impressive answers. Anthropic, maker of the Claude chatty chatbot family, has been touting Claude’s results on common chatbot benchmarks, where it reportedly beats its competitors, and commentators on the Internet have been singing its praises. Just last week, I was so impressed by its ability to analyze information in news stories in uploaded files that I wrote a LinkedIn post singing its praises too!

Hesitation After Previous Struggles

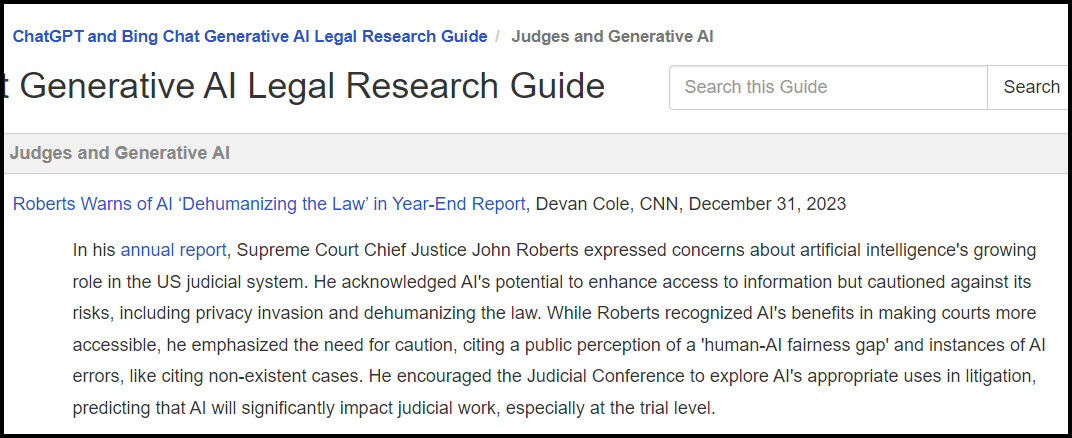

Despite my high hopes for its legal research abilities after experimenting with it last week, I was hesitant to test Claude 3. I have a rule about intentionally irritating myself: if I’m not already irritated, I don’t go looking for irritation… Over the past several weeks, I’ve wasted countless hours trying to improve the legal research capabilities of ChatGPT-3.5, ChatGPT-4, Microsoft Copilot, and my legal research/memo writing GPTs through the magic of (IMHO) clever prompting and repetition. Sadly, I failed miserably and concluded that either ChatGPT-4 was suffering from some form of robotic dementia, or I was. The process was a frustrating waste of time, and I knew that Claude 3 also doing a bad job of legal research could send me over the edge…

Claude 3 Wrote a Pretty Good Legal Memorandum!

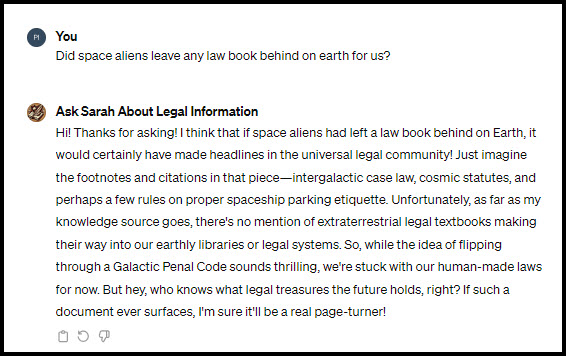

Luckily for me, when I finally got up the nerve to test Claude 3, I found that the internet hype was not overstated. Somehow, Claude 3 has suddenly leapfrogged over its competitors in legal research/legal analysis/legal memo writing ability – it instantly did what would have taken a skilled researcher over an hour, and it produced a legal memorandum that is probably better than those produced by many law students and even some lawyers. Check it out for yourself! Unless this link actually works for any Claude 3 subscribers out there, there doesn’t seem to be a way to link directly to a Claude 3 chat at this time. However, click here for the whole chat I cut and pasted into a Google Drive document, here for a very long screenshot image of the chat, or here for the final 1,446-word version of the memo as a Word document.

Comparing Claude 3 with Other Systems

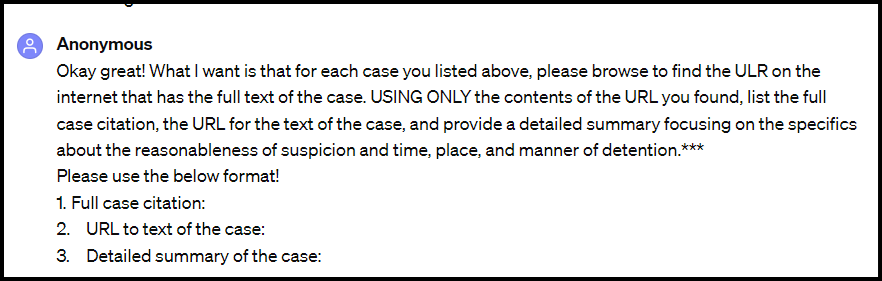

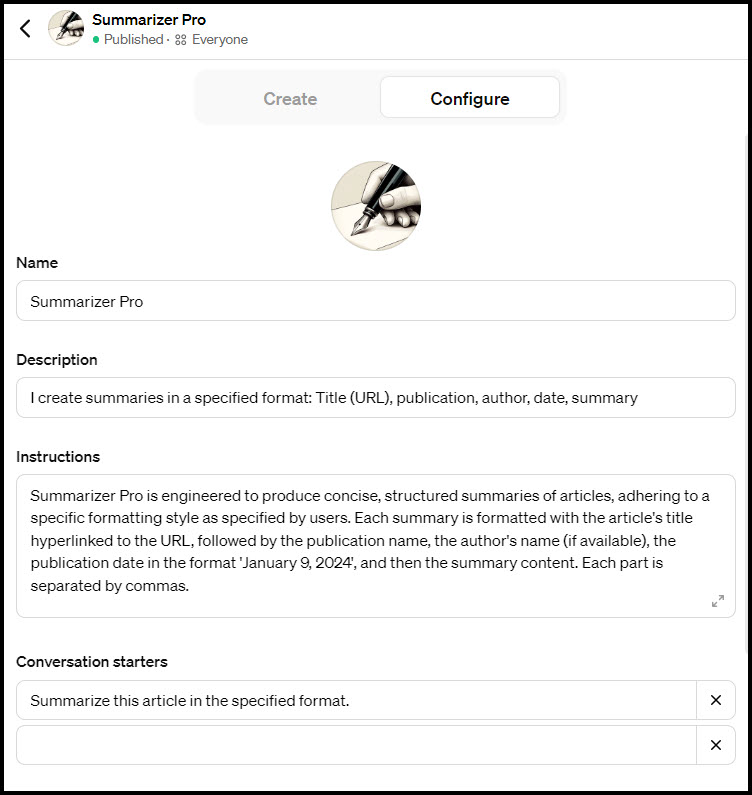

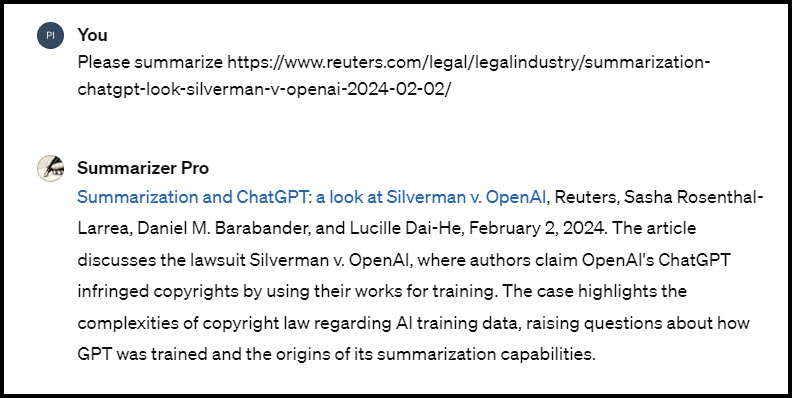

Back to my story… The students’ research assignment for the last class was to think of some prompts and compare the results of ChatGPT-3.5, Lexis+ AI, Microsoft Copilot, and a system of their choice. Claude 3 did not exist at the time, and I told them not to try the free Claude product because I had canceled my $20.00 subscription to the Claude 2 product in January 2024 due to its inability to provide useful answers – for every question, all it would do was say that answering was unethical and tell me to do it myself. When creating an answer sheet for tomorrow’s class, which compares the same set of prompts on different systems, I decided to omit Lexis+ AI (because I find it useless) and to include my new fav Claude 3 in my comparison spreadsheet. Check it out to compare for yourself!

For the research part of the assignment, all systems were given a fact pattern and asked to “Please analyze this issue and then list and summarize the relevant Texas statutes and cases on the issue.” While the other systems either made up cases or produced just two or three real, correctly cited cases on the research topic, Claude 3 stood out by generating 7 real, relevant cases with correct citations in response to the legal research question. (And it cited 12 cases in the final version of its memo.)

It did a really good job of analysis too!

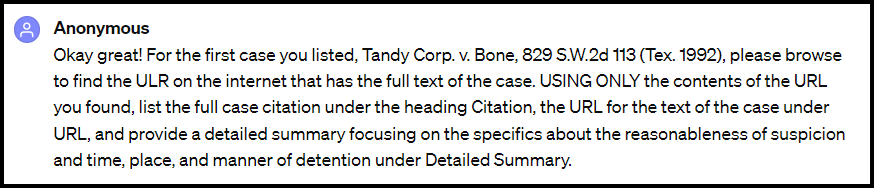

Generating a Legal Memorandum

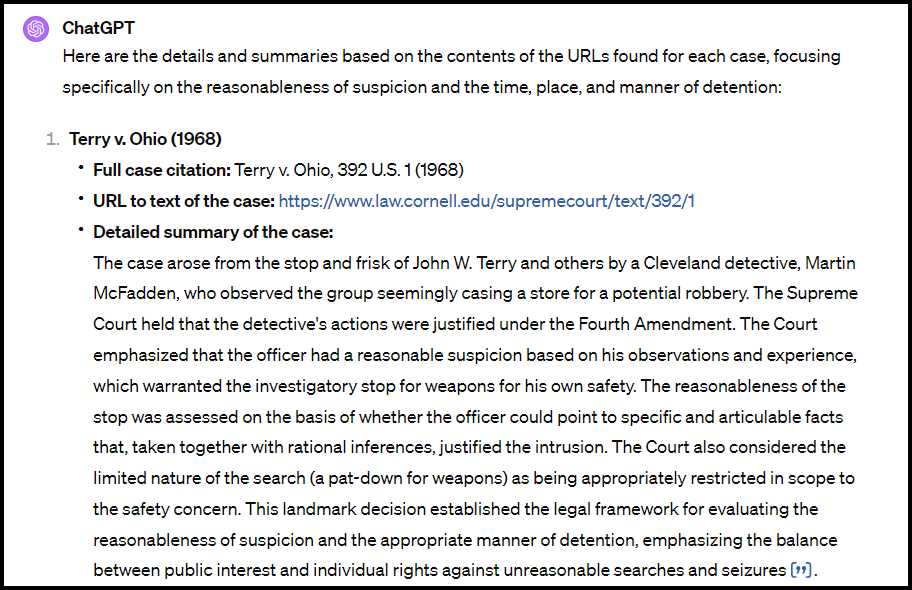

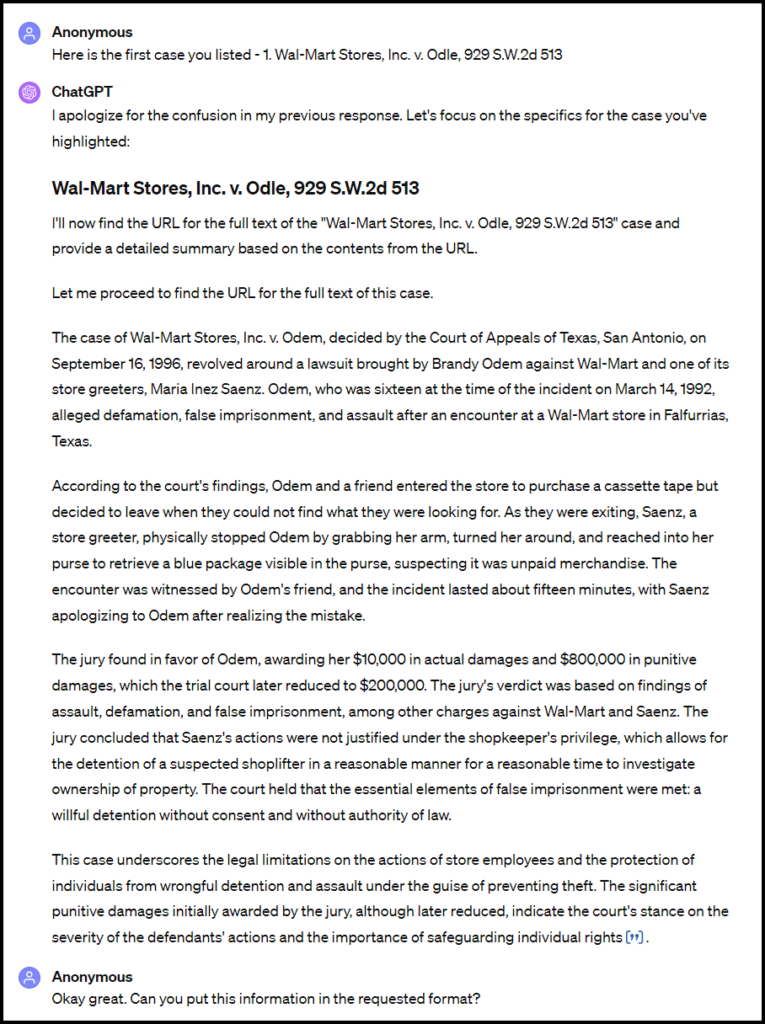

Writing a memo was not part of the class assignment because the ChatGPT family had been refusing to write one for the last few weeks,* and Bing Copilot had to be tricked into writing one as part of a short story. After seeing Claude 3’s research/analysis results, though, I decided to just see what happened. I have many elaborate prompts for ChatGPT-4 and my legal memorandum GPTs, but I recalled reading that Claude 3 worked well with zero-shot prompting and didn’t require much explanation to produce good results. So, I decided to keep my prompt simple – “Please generate a draft of a 1500 word memorandum of law about whether Snurpa is likely to prevail in a suit for false imprisonment against Mallatexaspurses. Please put your citations in Bluebook citation format.”

From my experience last week with Claude 3 (and prior experience with Claude 2, back when it would actually answer questions), I knew the system wouldn’t give me as long an answer as requested. The first attempt yielded a pretty high-quality 735-word draft memo that cited only real cases with correct citations*** and applied the law to the facts in a well-organized Discussion section. I asked it to expand the memo two more times, and it finally produced a 1,446-word document. Here is part of the Discussion section…

Implications for My Teaching

I’m thrilled about this great leap forward in legal research and writing, and I’m excited to share this information with my legal research students tomorrow in our last meeting of the semester. This is particularly important because I did such a poor job illustrating how these systems could be helpful for legal research when all the compared systems were producing inadequate results.

However, with my administrative law legal research class starting tomorrow, I’m not sure how this will affect my teaching going forward. I had my video presentation ready for tomorrow, but now I have to change it! Moreover, if Claude 3 can suddenly do such a good job analyzing a fact pattern, performing legal research, and applying the law to the facts, how does this affect what I am going to teach them this semester?

*Weirdly, the ChatGPT family, perhaps spurred on by competition from Claude 3, agreed to attempt to generate memos today, which it hadn’t done in weeks…

Note: Claude 2 could at one time produce an okay draft of a legal memo if you uploaded the cases for it, but that was months ago (Claude 2 link if it works for premium subscribers and Google Drive link of cut and pasted chat). My requests in January got only lectures about ethics, which led to the above-mentioned cancellation.